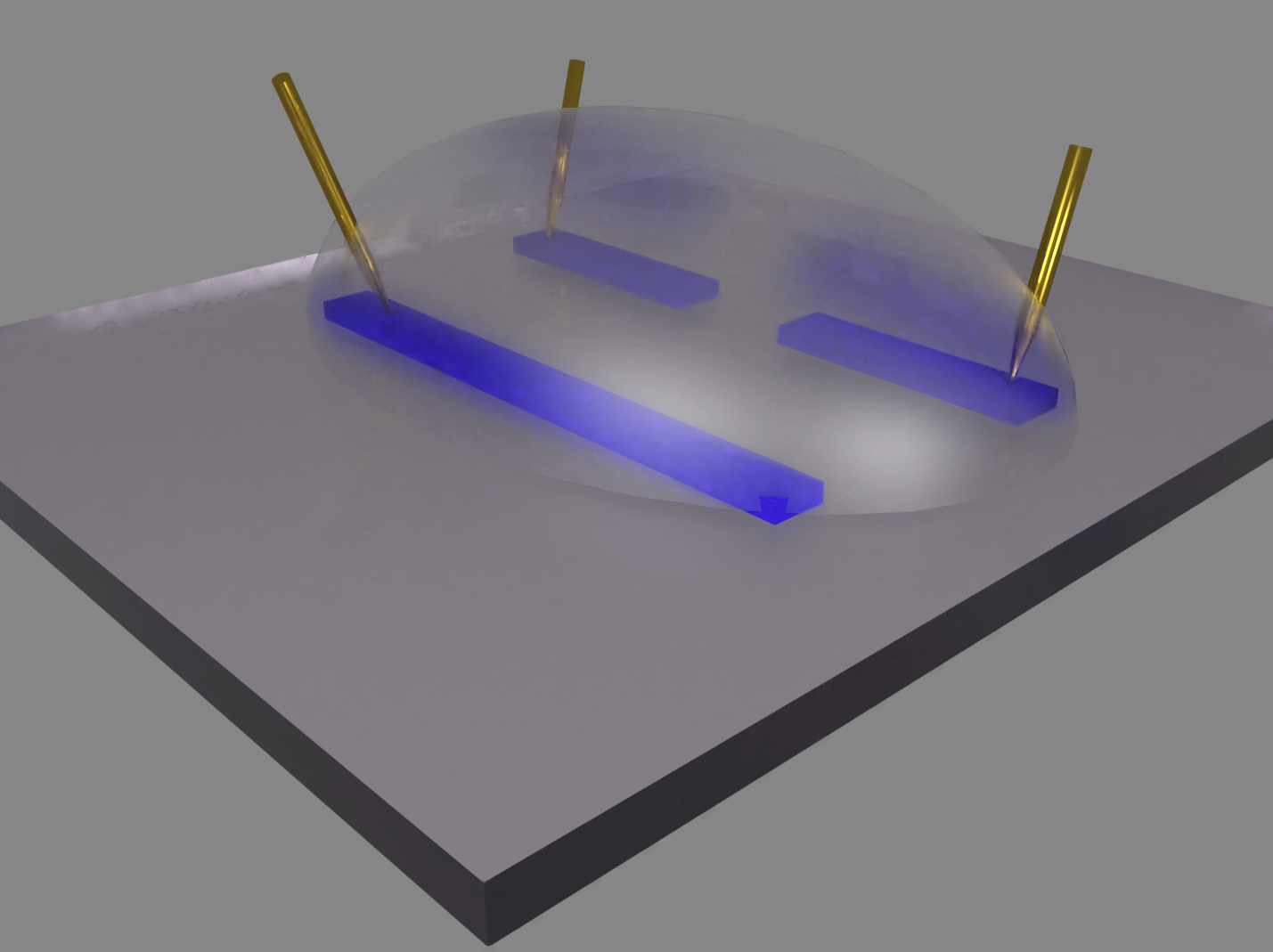

Organic electrochemical transistors for biosensing

Can "messy" materials sense better in messy environments?

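Modern electronics are powered by billions of silicon transistors, but these rigid silicon devices are not necessarily biocompatible. Organic electrochemical transistors, whose channels are made of soft conducting polymers that take up ions from their surroundings, are a promising alternative for sensing in biological environments.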

Potential Skills Developed: Electrochemistry, nanoscale fabrication and patterning, sensor design and fabrication.

Nanoscale force microscopy to improve sensors and solar cells

Feeling invisible atoms using (the) force

In force microscopy, measuring the magnetic or electrostatic forces between a nanometer-scale tip and a sample surface can produce images with nanometer resolution; however, linking the raw experimental data to specific sample properties can be challenging.
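As an illustration of what linking raw data to a sample property can look like (an assumed example, not the group's published method), consider an electric force microscopy measurement in which the cantilever's frequency shift Δf is recorded while the tip voltage V is swept. Under the common small-amplitude approximation, Δf(V) ≈ -(f0 / 4k) · C''(z) · (V - Φ)², where f0 is the cantilever resonance frequency, k its spring constant, C''(z) the second derivative of the tip-sample capacitance, and Φ the tip-sample contact potential difference. Fitting a parabola and reading off its vertex then estimates Φ. The function names, parameter values, and sign convention below are hypothetical choices for this sketch.

```python
"""Sketch: estimate a contact potential difference from EFM frequency-shift data.

Assumes the small-amplitude model
    delta_f(V) = -(f0 / (4 k)) * C2 * (V - phi)**2
Names and parameter values are hypothetical and for illustration only.
"""


def fit_quadratic(xs, ys):
    """Least-squares fit of y = a*x**2 + b*x + c; returns (a, b, c)."""
    s = [sum(x ** n for x in xs) for n in range(5)]  # s[n] = sum of x^n
    t = [sum(y * x ** n for x, y in zip(xs, ys)) for n in range(3)]  # t[n] = sum of y*x^n
    # Augmented normal equations for the unknowns (a, b, c).
    m = [
        [s[4], s[3], s[2], t[2]],
        [s[3], s[2], s[1], t[1]],
        [s[2], s[1], s[0], t[0]],
    ]
    # Gaussian elimination with partial pivoting.
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            factor = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= factor * m[col][c]
    # Back-substitution.
    p = [0.0, 0.0, 0.0]
    for r in (2, 1, 0):
        p[r] = (m[r][3] - sum(m[r][c] * p[c] for c in range(r + 1, 3))) / m[r][r]
    return tuple(p)


def surface_potential(voltages, freq_shifts):
    """Return the voltage at the fitted parabola's vertex, an estimate of phi."""
    a, b, _ = fit_quadratic(voltages, freq_shifts)
    return -b / (2 * a)


if __name__ == "__main__":
    # Hypothetical, illustrative parameters (not measured values).
    f0, k, c2, phi = 70e3, 3.0, 5e-4, 0.35  # Hz, N/m, F/m^2, V
    volts = [-2.0 + 0.25 * i for i in range(17)]  # tip-voltage sweep, -2 V to +2 V
    shifts = [-(f0 / (4 * k)) * c2 * (v - phi) ** 2 for v in volts]
    # Expected: close to the assumed phi of 0.35 V.
    print(f"estimated phi = {surface_potential(volts, shifts):.3f} V")
```

Because Φ sits at the vertex, -b / (2a), the estimate does not depend on f0, k, or C''(z). Fitting many voltages by least squares, rather than solving exactly from three points, makes the estimate more robust to the noise present in real measurements.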

Potential Skills Developed: Python programming, supercomputing, LabVIEW

Collaborators: The Marohn Group (Cornell University)

Virtual Labs in Chemical Education

All learning is forgotten, but leaves an imprint behind

ChemCollective offers a variety of online virtual lab activities; we have worked with collaborators to add new features to these simulations.

Potential Skills Developed: Data analysis, JavaScript programming and web development, software engineering (version control and unit testing), educational research methodology

Collaborators: Dr. David Yaron and Dr. Gizelle Sherwood (Carnegie Mellon University)

Artificial Intelligence and Machine Learning Education

Our robot overlords are coming - or are they?

Applications of machine learning and artificial intelligence have grown dramatically in the last 10 years. No-code tools like Wekinator and Google's Teachable Machine help introduce these ideas and tools to non-specialists.

To help students see the power of AI and ML, we are developing desktop and web applications that let them build and use more models by swapping out different inputs and outputs. For example, a basic demo uses a Teachable Machine model with webcam input to play different sounds (different outputs).
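One way to picture "swapping out inputs and outputs" is to keep the trained model and the actions it triggers as separate, interchangeable pieces. The sketch below assumes that design; every name in it (`make_app`, `toy_model`, `sound_outputs`, `text_outputs`) is hypothetical, `toy_model` merely stands in for a trained classifier such as one built with Teachable Machine, and none of this is the Teachable Machine API.

```python
"""Sketch: decouple a classifier from the action each predicted label triggers.

All names are hypothetical; this is not the Teachable Machine API.
"""
from typing import Callable, Dict, Sequence

# A model maps one input sample (e.g., webcam features) to a score per label.
Model = Callable[[Sequence[float]], Dict[str, float]]
# Outputs map each label to an action; here each action returns a description.
Outputs = Dict[str, Callable[[], str]]


def make_app(model: Model, outputs: Outputs) -> Callable[[Sequence[float]], str]:
    """Wire a model to a set of outputs; either side can be swapped independently."""
    def run(sample: Sequence[float]) -> str:
        scores = model(sample)
        label = max(scores, key=scores.get)  # pick the highest-scoring label
        return outputs[label]()
    return run


def toy_model(sample: Sequence[float]) -> Dict[str, float]:
    """Stand-in classifier: favors 'hand_up' when the mean input value exceeds 0.5."""
    mean = sum(sample) / len(sample)
    return {"hand_up": mean, "hand_down": 1.0 - mean}


sound_outputs: Outputs = {
    "hand_up": lambda: "play drum.wav",
    "hand_down": lambda: "play piano.wav",
}
text_outputs: Outputs = {
    "hand_up": lambda: "show 'Hello!'",
    "hand_down": lambda: "show 'Goodbye!'",
}

if __name__ == "__main__":
    bright_frame = [0.9, 0.8, 0.95]
    print(make_app(toy_model, sound_outputs)(bright_frame))  # play drum.wav
    print(make_app(toy_model, text_outputs)(bright_frame))   # show 'Hello!'
```

Because the app only depends on the label names, replacing the sounds with text (or the webcam model with, say, an audio model that uses the same labels) requires no change to `make_app` itself.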

Potential Skills Developed: Programming (Python, JavaScript, or Java), machine learning and AI tools (TensorFlow, PyTorch, Weka/Wekinator)

Funding: XSEDE Startup Allocation on Jetstream Cloud